Testing version live*

On Saturday night, approaching 11pm, I set up a Netlify account, connected it to my GitHub and launched my initial test version as an actual, real website.

I made it.

I hit my goal for these two weeks of bringing my idea to life with an example test day of data.

Without having written a single line of code.

I can’t believe it.

It’s been an incredibly long two weeks. It feels like I’ve been away from work for a month, and everything feels slightly foreign as I try to get back into the swing of things.

So how did my second week go?

(note: similar to last week my memory is a bit hazy, so some events may have been repressed, and experiences merged together…)

*with layout issues and some bugs, annoyingly.

Persistently persistent

I think my biggest takeaway is that this is the most persistent I’ve been in a very long time.

It would have been very easy to give up in the face of so many frustrations and chasing-my-tail moments, but I didn’t.

Probably because I didn’t want to give up and waste two weeks of vacation, and because I had a very clear plan and objective.

I don’t work out, run or keep fit … being persistent like this is a muscle I don’t use that much, to be honest.

There were no 3am finishes in the second week, but still long hours.

Sunday was the first time in 13 days that I didn’t work at my desk on hold!

Things started better

After the horrific first week that actually ended okay with:

the main game screen laid out

clue mechanics working

the hold! button progressing the price chart

the price chart y-scaling and working correctly

the week began well, finishing off the “post-sell” mechanics and animations, which I considered vital for the emotional dopamine and come-down catharsis flow of the game.

Things got nitty-gritty animating the post-sell chart and the appearance of the player’s achievement (I went round in some loops trying to get the timing right) BUT it was all much more straightforward and a LOT less painful than before.

I would even say enjoyable.

I added some lovely GSAP animations for the text counting up on clue reveals and on the final achievement reveal.

With the game screen done, it was on to:

Stats (where players see how everyone did that day, and their own streak history etc)

Info (where they learn the company identity, current prices and take in some ‘diversify for the long-term’ thoughts).

Footer

Modals (How-to-play, settings and about)

Because these were static pages with no animations or interactivity, I was hoping they’d be a lot simpler to build out.

I was mostly right.

There were some database hiccups (before some major ones later, explained below…) but in general it was … again … so much more straightforward than week one.

One magic moment

Adding in the Settings modal was a real “wow” where I think for the first time in the whole two weeks I just prompted:

OK … now add the Settings modal

and it just … did it, perfectly. Working.

I couldn’t believe it.

I don’t know if it was because of my original requirements document, or the AI’s training set just works for this specific (common!) use-case … but wow.

If only the whole two weeks was like that!

Connecting the front-end to the back-end

So after a few days I had all of my content built and functionality working, in a front-end sense.

I mean that clicking buttons ‘did things’ and took you places, but none of this was actually connected to the back-end.

So that’s when it became time to plug ‘em together.

And that’s when the nightmares of last week came back…

RLS nightmares

There are essentially two steps that need to happen with the database (remember, the actual game data is just a ‘static file’, requiring no database fetching etc.) and they are:

identify the user loading the page, and show them the relevant ‘splash’ screen

i.e. are they a fresh user? Do they need to accept terms? Have they already played?

when the user plays a game, save that data by:

adding a row to a separate table that keeps one row per player per day

updating the player’s main row with their overall stats
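For anyone wanting to see those two steps concretely, here is a minimal TypeScript sketch. The field names (`acceptedTerms`, `lastPlayedDay`, `currentStreak` and so on) are hypothetical, not hold!'s actual schema, and the database calls are left out: it only shows the splash decision and the two writes as plain functions.

```typescript
// Hypothetical record shapes: field names are illustrative, not hold!'s schema.
interface PlayerRow {
  playerId: string;
  acceptedTerms: boolean;
  gamesPlayed: number;
  currentStreak: number;
  lastPlayedDay: string | null; // "YYYY-MM-DD", or null before the first game
}

interface PlayRow {
  playerId: string;
  day: string;
  returnPct: number; // the player's result for that day's game
}

type Splash = "new-player" | "accept-terms" | "already-played" | "ready-to-play";

// Step 1: work out which splash screen this visitor should see.
function chooseSplash(player: PlayerRow | null, today: string): Splash {
  if (player === null) return "new-player";
  if (!player.acceptedTerms) return "accept-terms";
  if (player.lastPlayedDay === today) return "already-played";
  return "ready-to-play";
}

// The calendar day before a "YYYY-MM-DD" date, computed in UTC.
function previousDay(day: string): string {
  const d = new Date(`${day}T00:00:00Z`);
  d.setUTCDate(d.getUTCDate() - 1);
  return d.toISOString().slice(0, 10);
}

// Step 2: one new per-player-per-day row, plus the player's updated overall stats.
function saveGame(player: PlayerRow, today: string, returnPct: number): { play: PlayRow; player: PlayerRow } {
  const continuesStreak = player.lastPlayedDay === previousDay(today);
  return {
    play: { playerId: player.playerId, day: today, returnPct },
    player: {
      ...player,
      gamesPlayed: player.gamesPlayed + 1,
      currentStreak: continuesStreak ? player.currentStreak + 1 : 1,
      lastPlayedDay: today,
    },
  };
}
```

In a real app, the two objects returned by `saveGame` would become an insert into the per-day table and an update of the player's row, and those writes are exactly where row level security has to let the player touch only their own data.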

Both of these require judicious use of Row Level Security (RLS) so that each player can only find, read and update their own data.
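To make RLS a bit more tangible: in supabase the rules live inside Postgres as policies on each table, typically along the lines of `create policy ... using (auth.uid() = player_id)`. The TypeScript below does not talk to a database at all; it is a sketch that imitates the effect of such a policy on an in-memory table, so you can see what “only find, read and update their own data” means in practice. `RlsTable`, `ownRowsOnly` and the `playerId` field are hypothetical names.

```typescript
// A policy decides, row by row, whether the caller may see or touch that row.
type Policy<T> = (row: T, callerId: string) => boolean;

// Hypothetical policy mirroring "auth.uid() = player_id": owners only.
const ownRowsOnly: Policy<{ playerId: string }> = (row, callerId) => row.playerId === callerId;

// In-memory table where every read and write is filtered through the policy,
// the way Postgres applies RLS before returning or updating anything.
class RlsTable<T extends { playerId: string }> {
  constructor(private rows: T[], private policy: Policy<T>) {}

  // Callers only ever get back rows the policy allows.
  select(callerId: string): T[] {
    return this.rows.filter((r) => this.policy(r, callerId));
  }

  // Updates silently skip rows the caller does not own; ownership itself
  // cannot be changed because playerId is excluded from the patch type.
  update(callerId: string, patch: Partial<Omit<T, "playerId">>): number {
    let changed = 0;
    this.rows = this.rows.map((r): T => {
      if (!this.policy(r, callerId)) return r;
      changed++;
      return { ...r, ...patch };
    });
    return changed;
  }
}
```

The key design point is that the filter is not optional: there is no `select` that bypasses the policy, which is the guarantee RLS gives when a browser talks to the database directly.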

Because I’m not starting with Accounts, my original documentation and plan was to use cookies.

Each cookie would store a unique identifier, and that identifier would be the authorisation for said finding, reading and updating of data.

That was the plan, anyway.

That’s what I thought the AI had set up during our ‘scaffolding’ phase, along with the RLS policies (a term I will hopefully not hear again for a very long time) … but…

… things were simply. not. working.

New vs. returning players wouldn’t be identified properly … games wouldn’t be saved properly.

It was back to error, error, error.

I was reminded of my half-baked knowledge of The Odyssey, when the hero is nearing home after his arduous journey, only to be thrown off course again and delayed further.

I think this was … Thursday, by this stage.

I actually cut myself some slack and had a quick laser-tag outing with some friends (unbelievably I won a game, I seriously thought I was too exhausted to walk, let alone run around shooting laser guns).

I thought Friday would be a quick case of zapping these damned RLS issues just like I zapped my laser-tag enemies (did I mention I won a game?).

However…

Never Forget to Google

One key thing I’ve learned is that you still just can’t beat a good ol’ Google search.

Never forget - these LLMs’ training data can only ever be so recent.

So when I googled all these cookie-based RLS issues I was having, one of the main hits was a supabase page all about a new feature of theirs called anonymous users, which did EXACTLY what I needed to do.

And using that approach would solve all of the issues I was having.

Which was a case of… 🙄🙃🤦‍♂️

This meant making a huge change to our supabase setup.

Little did I know that this would only be one of the major changes I’d have to make as I neared the end of my two weeks.

Not ideal timing…

Two major changes

Anonymous users & supabase

After getting the AI to read the supabase doc about their native functionality, it agreed that embracing it was the best idea.

Getting the AI to integrate fully with supabase so it could just “do” all this stuff directly without me needing to be involved was a constant pain during these two weeks.

I don’t know why, but it would never remember the direct connections it had at its disposal.

Perhaps it just didn’t understand that it could only use certain connections for certain types of things.

But it kept writing SQL code to then send over to supabase as a transfer file, which would clog up our git commits and leave lingering things all over the place.

So muggins me had to click around in full-on numpty mode in the supabase web UI to get things sorted.

Out with cookies, in with the native anonymous users approach.
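A minimal sketch of what the anonymous-user flow might look like on page load. It is written against a trimmed-down interface rather than importing `@supabase/supabase-js`, so it runs on its own. `getSession()` and `signInAnonymously()` are methods on the supabase-js v2 `auth` client, but the `MinimalAuth` interface and `ensurePlayerId` helper are my own hypothetical names, and the return shapes are simplified, so check them against your installed version. As far as I understand Supabase's docs, anonymous sign-ins also need switching on in the project's auth settings.

```typescript
// Only the fields this sketch reads; the real supabase-js types are richer.
interface MinimalUser { id: string }
interface MinimalSession { user: MinimalUser }
interface MinimalAuth {
  getSession(): Promise<{ data: { session: MinimalSession | null }; error: unknown }>;
  signInAnonymously(): Promise<{
    data: { user: MinimalUser | null; session: MinimalSession | null };
    error: unknown;
  }>;
}

// Returns the current player's id, creating an anonymous user on a first visit.
// That user id is what RLS policies compare against via auth.uid().
async function ensurePlayerId(auth: MinimalAuth): Promise<{ id: string; isNew: boolean }> {
  const { data } = await auth.getSession();
  if (data.session) {
    // Returning player: a stored session already identifies them.
    return { id: data.session.user.id, isNew: false };
  }
  // Fresh player: ask supabase to create an anonymous user and session.
  const { data: created, error } = await auth.signInAnonymously();
  if (error || !created.user) throw new Error("anonymous sign-in failed");
  return { id: created.user.id, isNew: true };
}
```

In the app you would pass the real client's auth object, e.g. `ensurePlayerId(supabase.auth)` where `supabase` comes from `createClient(url, anonKey)`, and use `isNew` to pick the splash screen.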

My eyes were also drawn to this explicit warning of theirs:

I don’t know what any of it meant, but I told the AI and it agreed we should use “dynamic rendering”.
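For what “dynamic rendering” means here: assuming the app uses the Next.js App Router, a route can either be prerendered once at build time (static) and served identically to everyone, or rendered fresh on each request (dynamic), which matters when each visitor has their own session. One explicit way to opt a route into dynamic rendering is the route segment config below. The file path is an assumption, and reading cookies or headers inside a route can also make it dynamic without this line.

```typescript
// app/page.tsx (path assumed): route segment config for the game page.
// Render on every request instead of prerendering one shared copy at build time.
export const dynamic = "force-dynamic";
```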

So that was also some refactoring it had to do.

By this stage I was so anxious the AI could overwrite all of the great work it had done, I was including:

…in a surgical way, being extremely careful not to edit or overwrite other functional code that does not need to change…

in every prompt.

It was back to “flux capacitor, fluxing…” mode as it just … did all the changes step by step.

Thankfully, without issue, more-or-less.

With our supabase woes sorted, now I had functionality working and connecting to the back-end.

A new player would be correctly identified vs. a returning player … games were saved … everything was working.

Until I realised the next major change we had to make, with one or two days remaining…

Truly single page

If you play NYT’s Connections, Minute Cryptic or any other daily game, you’ll notice that despite the splash screen, the main game screen and the post-game stats, you never see a different URL in the address bar.

You never see (or can navigate to) https://www.nytimes.com/games/connections/stats for example, to remind yourself how you did.

THIS is the experience I was hoping to achieve, and what I had thought (just like the RLS policies) we had set up during our day 1 scaffolding.

It was explicitly in my documentation at the start.

During build and testing, I was navigating to /game, /stats, /info … but I thought that was just a testing ability that would somehow not be ‘a thing’ for the live game.

I was wrong.

I forget what I was trying to fix, but from what the AI said I suddenly twigged…

… my game was not architected in a truly single page way.

There would be separate pages the user could navigate to.

Not only was this unwanted, it was also making our data fetching and processing hugely more complicated, to allow for the various scenarios.

When I persisted in getting the AI to realise this was wrong, and that the requirement was for truly everything to be on a single page, it said refactoring the whole experience would be a huge undertaking.

🙄🙃🤦‍♂️

We made a new “git branch”, which basically meant our current working functionality would remain untouched while we (that is, the AI) refactored all our code.

We can turn once again to the always relevant Back to the Future to visualise this:

The AI did all of these changes in a matter of minutes.

I have the suspicion that this kind of stuff would be a real pain for actual developers to do.

But after ironing out some kinks, and testing … we had done it.

We had a working, single page experience.
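A sketch of the truly single page idea, assuming a React front-end (which Next.js implies): instead of separate /game, /stats and /info routes, which screen is showing becomes a piece of state, and the URL never changes. The view names and transitions below are hypothetical and only loosely follow the flow described in this post.

```typescript
// Every "screen" lives behind one URL; which one is visible is just state.
type View = "splash" | "game" | "stats" | "info";

type Action =
  | { type: "start" }
  | { type: "sell" }
  | { type: "showInfo" }
  | { type: "showStats" };

// Hypothetical transitions: an action only moves you on from the screen it
// belongs to, and is ignored anywhere else.
function nextView(view: View, action: Action): View {
  switch (action.type) {
    case "start":
      return view === "splash" ? "game" : view;
    case "sell":
      return view === "game" ? "stats" : view;
    case "showInfo":
      return view === "stats" ? "info" : view;
    case "showStats":
      return view === "info" ? "stats" : view;
  }
}
```

In a React component this could drive `const [view, dispatch] = useReducer(nextView, "splash")`, rendering a different panel for each `view`. Since there are no extra routes, there is simply nothing for a player to navigate to directly.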

The last thing on Saturday afternoon was to add in some basic responsiveness so the page contents made best use of laptop/desktop screens.

Then one final push of my code to my remote ‘place’.

Set up a Netlify account, link to my code, point my domain at the Netlify service…

… it was live.

The security certificate was still pending, which meant the site wouldn’t be served over https:// yet. The main effect for me was that the share button on mobile wouldn’t work: it uses something called the Web Share API to open your device’s native ‘share tray’ so you can share your score directly into WhatsApp etc., and that API only works on secure (https://) pages.

But the AI assured me that come morning, it would be working.
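A minimal sketch of a share button that uses the Web Share API where it is available and falls back to copying the text otherwise. `navigator.share()`, `navigator.clipboard.writeText()` and `window.isSecureContext` are real browser APIs; the `ShareCapable` interface and `shareScore` function are hypothetical names, and the interface only exists so the logic can be exercised outside a browser.

```typescript
// The slice of `navigator` this sketch needs; in a page, pass the real navigator.
interface ShareCapable {
  share?: (data: { title?: string; text?: string; url?: string }) => Promise<void>;
  clipboard?: { writeText: (text: string) => Promise<void> };
}

type ShareResult = "shared" | "copied" | "unavailable";

// Prefer the native share tray (Web Share only works in secure, https:// contexts);
// otherwise try copying the score text to the clipboard.
async function shareScore(nav: ShareCapable, secure: boolean, text: string): Promise<ShareResult> {
  if (secure && typeof nav.share === "function") {
    await nav.share({ text });
    return "shared";
  }
  if (nav.clipboard) {
    await nav.clipboard.writeText(text);
    return "copied";
  }
  return "unavailable";
}
```

In the page this would be called as `shareScore(navigator, window.isSecureContext, scoreText)`. Two caveats: the clipboard API is also restricted to secure contexts, so on plain http both paths may be unavailable, and `navigator.share()` rejects if the player dismisses the tray, so a real button would want a `try`/`catch` around the call.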

So on Saturday night, about midnight (with the clocks going forward meaning I would lose one more hour of sleep!) I went to bed.

Done.

I had taken two weeks off work with this specific goal, to get my game built and live with a single example day of data.

And I had just about managed it.

It’s easy to overlook the incredible feat of having turned my ideas (which were complex and required lots of custom work!) into a live, working website without writing a line of code.

Perhaps one year ago this would have been impossible.

It would be silly not to take a moment to be amazed and thankful, and instead dive straight into what I found most frustrating about my first attempt at vibe coding…

Recurring frustrations

… but we’re only human, eh.

So what has stuck in my memory from the past two weeks?

There are so many memes I could make…

Read the Rules!

I’m sure that Cursor wasn’t always reading the rules I had created and updated throughout my two weeks. It’s supposed to, but little things here and there hinted to me that it doesn’t always strictly follow every instruction in there.

Stop Deleting Stuff!

So many times I would prompt Cursor to make a simple update, and it would just … delete stuff. Once I asked it to tweak two specific lines of the how-to-play text … and it just wiped the whole lot and put in only those two lines.

I lost count of the number of times it randomly reset and recreated my buttons.

The ones on the Splash page especially: I would notice too late that at some point recently it had written over our proper ones with slightly wrong versions it just … made up.

I never took the time to actually read a Cursor guide, so I was never fully sure what the AI was doing when it was … doing stuff.

E.g. when it changes code, some lines are highlighted red. Has it deleted those lines? They looked important! 🤷‍♂️

Do things at the right level, given our setup

Another thing I lost count of was the number of times it tried to create a new component with proper light mode / dark mode theming … and it just kept on getting it wrong.

Because we were using Tailwind, there was a certain ‘way’ it had to do it.

After a few times, I got it to write a specific doc for how it should do it in the future … but it never quite seemed to remember it properly.

Colours were another annoyance. I was never really sure where, or at what level, our colours were set up.

I think this is where the simple text box for prompting, for all its massive usability gains, could be improved.

E.g. as I start typing ‘use the colour primar…’ some kind of auto-detect should pop up and correctly identify the ‘primary purple’ colour I mean, so that I can be sure - and the AI is sure - of what I’m on about.

A few times getting the styling right took multiple attempts because it was choosing non-existent colours.
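The auto-detect idea could work roughly like this: colours live in one named set of tokens, typed text is matched against those names, and anything that isn't a defined token is rejected rather than silently invented. Apart from “primary purple”, the token names and hex values below are made up for illustration; in a Tailwind project the equivalent list would typically sit in the theme configuration.

```typescript
// Hypothetical design tokens: only "primary purple" comes from the post;
// the other names and every hex value are invented for the example.
const colourTokens = new Map<string, string>([
  ["primary-purple", "#6d28d9"],
  ["primary-purple-light", "#a78bfa"],
  ["surface-dark", "#111827"],
]);

// Autocomplete: normalise what was typed ("Primary purp") and list matching tokens.
function suggestColours(typed: string): string[] {
  const key = typed.trim().toLowerCase().replace(/\s+/g, "-");
  return Array.from(colourTokens.keys())
    .filter((name) => name.startsWith(key))
    .sort();
}

// Refuse colours that don't exist instead of letting a made-up one slip through.
function resolveColour(name: string): string {
  const hex = colourTokens.get(name);
  if (hex === undefined) throw new Error(`Unknown colour token: ${name}`);
  return hex;
}
```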

Supa-annoying supabase

I don’t know if it was just me doing something wrong, but getting the AI to remember it could directly work with our supabase setup was so frustrating.

Time and time again it would try annoying workarounds, and I would just type yet again ‘check our database doc, you can connect directly’.

Tunnel vision

The biggest horror of all the horror shows was week one’s crisis of a broken localhost. This turned out to be because Node had somehow become out of sync with Next.js.

The AI spent hours chasing its own tail trying to fix Next.js because it could see we were getting Next.js errors.

It took me a simple google search to see that we should maybe check Node.

Turns out, that was the problem all along…

KISSing

Week 1 started horribly mainly because, and I don’t know why, the AI took an overly complicated approach to our database setup.

On other occasions too it kept diving into some really complex thinking and I kept having to say … keep it simple, stupid!

Dory

Sort of the common thread throughout all of these is the seeming lack of memory.

I think this is inevitable given the probabilistic nature of LLMs, and especially when getting them to create deterministic things (e.g. code).

It’s good, we got there in the end, but my god it’s annoying when it just - and you never know why - forgets some important detail about your setup, your codebase, or anything and romps off into the distance doing something completely wrong. Nooooo, come back!

If I could code, I would have. I’m sure it would’ve been quicker, and a lot less frustrating.

But I can’t, so I didn’t.

Perhaps in the future I’ll use an add-on like Builder.io, or try a different tool, or maybe Cursor itself will have upgraded by then - competition in this space is fierce right now!

I definitely think there needs to be a graphical user interface part to this, whereby I can select the part of the page that is wrong, and select the styling it should be using, just like in Figma.

By the final days I did actually feel the urge to find out what on earth all the files it had made were, and where I could just find the relevant bits of code and try to change them myself.

That’s the other thing - constantly explaining in written text what is wrong, or instructing what you want, asking for changes ….. it. is. ex. hausting.

Taking a step back

So now it’s live, I’m getting feedback, and it’s clear there are a bunch of layout issues, which are 😤 REALLY ANNOYING 😤 because they prevent people from testing the game without me pointing things out.

Chrome’s inspector only gets you so far!

It’s also a bit galling because, as a UX designer, still making some basic oversights is silly. And yet - that’s the core tenet of user experience design - to build and test early so it’s easier to fix.

But for now … I’m just listening, capturing, taking it all in.

I’m exhausted and spent, currently with not much appetite for rushing back to Cursor and implementing fixes and building the back-end scheduled automated programs that actually create all the game data.

There were moments during the two weeks where I really nearly cried with joy when my idea was becoming a real alive thing for the first time.

But now it is a live thing, albeit a testing version with lots of improvements to make, I can’t escape the feeling of … just being exhausted!

Not just in the tired sense, but all the things.

I don’t feel elated or deflated.

None of the lateds.

I think I need to refill my stock of emotional juice so that I can feel really happy and thrilled with what I’ve achieved.

I am not a developer, I didn’t write a single line of code … but I’ve made a thing!

Next Steps

First Improvements

work through feedback

come up with redesign plan

required bug fixes

implement an improved + fixed layout/experience

Size + scope up Backend programs

The current version only uses a single example day of data.

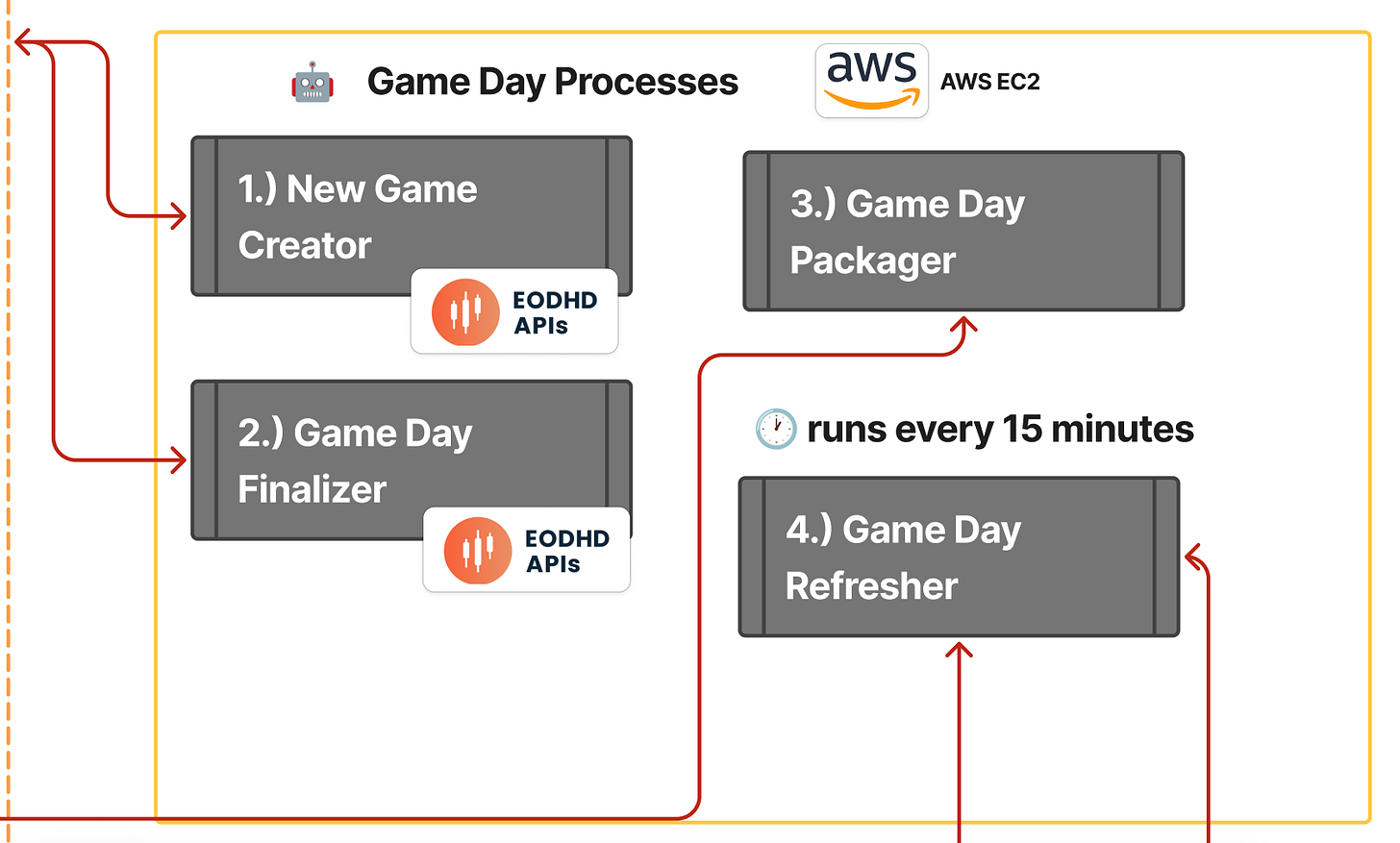

If this game is to become really real, I will need to make - I estimate - four automated “game day processes” as follows:

New Game Creator

This will connect to EODHD’s APIs (basically computers talking to computers, lovely jubbly) and ‘get’ the historical stock data to build each day’s game data of stock prices and company info.

I need to decide how this is done … randomly? Random year, random window, random company?

The trouble with this is that in general stocks go up over time, so I want to be careful that I don’t have too many windows that turn out the same.

So do I decide on some ‘patterns’ that it should use as criteria for ‘selecting’ windows?

Once this is made, it could theoretically run in batches, making weeks or months of games at a time.
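One way to tackle the “too many windows that turn out the same” worry: sample random windows, classify each by its shape, and keep a window only while its pattern is under a quota. This is a sketch under my own assumptions (a single price series, four made-up patterns, untuned 10% and 15% thresholds), not a decided design; picking the company and year would sit around it.

```typescript
type Pattern = "rise" | "fall" | "dip-then-recover" | "flat";

// Classify a window of closing prices. Thresholds are illustrative guesses.
function classify(series: number[]): Pattern {
  const start = series[0];
  const end = series[series.length - 1];
  const low = Math.min(...series);
  if (low < start * 0.85 && end >= start) return "dip-then-recover";
  if (end > start * 1.1) return "rise";
  if (end < start * 0.9) return "fall";
  return "flat";
}

interface WindowPick { startIndex: number; pattern: Pattern }

// Randomly sample window start points, accepting one only while its pattern is
// under quota, so a long bull run cannot fill every game day with "rise".
function pickWindows(
  prices: number[],
  windowLen: number,
  quotas: Record<Pattern, number>,
  rng: () => number = Math.random,
  maxTries = 1000,
): WindowPick[] {
  const counts: Record<Pattern, number> = { rise: 0, fall: 0, "dip-then-recover": 0, flat: 0 };
  const target = Object.values(quotas).reduce((a, b) => a + b, 0);
  const picks: WindowPick[] = [];
  const used = new Set<number>();
  const lastStart = prices.length - windowLen;
  if (lastStart < 0) return picks; // series too short for even one window

  for (let i = 0; i < maxTries && picks.length < target; i++) {
    const startIndex = Math.floor(rng() * (lastStart + 1));
    if (used.has(startIndex)) continue; // never reuse the same window
    used.add(startIndex);
    const pattern = classify(prices.slice(startIndex, startIndex + windowLen));
    if (counts[pattern] < quotas[pattern]) {
      counts[pattern]++;
      picks.push({ startIndex, pattern });
    }
  }
  return picks;
}
```

Passing a seeded random function instead of `Math.random` would make a batch reproducible, which could help when regenerating weeks of games.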

Game Day Finalizer

Roughly the day before a game goes live, this bad boy will again use EODHD to get the latest stock price of the upcoming day’s company stock. This is referenced in the Info part of the game, post-sell.

I also reference a world index, but annoyingly I don’t think an API exists for it. Perhaps some kind of ‘web scraper’ will be part of this process to fetch the latest world index value.
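For the Finalizer's price lookup, a small sketch that builds an EODHD end-of-day request URL and picks the latest close from the response. The URL pattern (`/api/eod/{ticker}` with `api_token`, `fmt`, `from` and `to` parameters) and the `date`/`close` fields reflect EODHD's end-of-day API docs as I understand them; treat them as assumptions to check. The function names are hypothetical, and the network call itself is left out.

```typescript
// One row of an end-of-day response (only the fields used here; assumed shape).
interface EodBar { date: string; close: number; adjusted_close?: number }

// Build an end-of-day request URL. Check the pattern and ticker suffixes
// (e.g. ".US") against EODHD's docs before relying on it.
function eodUrl(ticker: string, apiToken: string, from: string, to: string): string {
  const params = new URLSearchParams({ api_token: apiToken, fmt: "json", from, to });
  return `https://eodhd.com/api/eod/${encodeURIComponent(ticker)}?${params.toString()}`;
}

// Pick the most recent close, whatever order the bars arrive in.
// ISO "YYYY-MM-DD" dates compare correctly as plain strings.
function latestClose(bars: EodBar[]): { date: string; close: number } | null {
  if (bars.length === 0) return null;
  const latest = bars.reduce((a, b) => (b.date > a.date ? b : a));
  return { date: latest.date, close: latest.close };
}
```

In Node 18+ the request would be roughly `const bars = (await (await fetch(url)).json()) as EodBar[]`, followed by `latestClose(bars)` before writing the price to supabase.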

Game Day Packager

Then, all of the game day data that lives in supabase (that processes 1 and 2 have created) will be packaged up into a flat text file, and that is what each day’s game will actually use to power the gameplay.

So when you access it on a given day, you’re not making repeated calls to my database for everything required to run the game. It’s mostly local data on your device. But don’t go looking for it - spoilers! 🤫
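A sketch of the Packager, assuming the flat text file is JSON. Every field name in `GameDayFile` is hypothetical; the point is that one self-contained file carries everything the day's game needs, with an empty stats section that can be filled in later.

```typescript
// Hypothetical layout for one day's flat file.
interface GameDayFile {
  version: 1;
  day: string; // "YYYY-MM-DD"
  company: { name: string; ticker: string; latestPrice: number };
  prices: number[]; // the price window the player steps through
  clues: string[];
  stats: { players: number; returnBuckets: Record<string, number> };
}

// Bundle the day's data into one JSON string, refusing to ship an unplayable day.
function packageGameDay(
  day: string,
  company: GameDayFile["company"],
  prices: number[],
  clues: string[],
): string {
  if (prices.length === 0) throw new Error(`No prices for ${day}`);
  const file: GameDayFile = {
    version: 1,
    day,
    company,
    prices,
    clues,
    stats: { players: 0, returnBuckets: {} }, // starts empty until players arrive
  };
  return JSON.stringify(file);
}
```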

Game Day Refresher

The final one is for the Stats post-sell view, where you get to see how you did vs. everyone else. This is much simpler than the other three, and will just:

amalgamate the row-per-player-per-game data into overall chart data

update just that part of the flat text file that number 3 made.

This means someone waking up in New York and playing will see how they did vs. everyone else before them, while at the same time…

…if someone who played that morning in London checks in again - they will see the same updated stats.
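A sketch of the Refresher: read the day's per-player rows, bucket the returns for the chart, and rewrite only the `stats` part of the day's file, leaving the game data untouched. It assumes the flat file is JSON with a `day` field and a `stats` section; the 10-point bucket width and all names are hypothetical.

```typescript
// One per-player-per-day row (hypothetical shape).
interface DailyPlay { playerId: string; day: string; returnPct: number }

// Count results into fixed-width bands keyed by their lower bound,
// e.g. 12% and 18.5% both land in "10", -4% lands in "-10".
function bucketReturns(plays: DailyPlay[], width = 10): Record<string, number> {
  const buckets: Record<string, number> = {};
  for (const p of plays) {
    const key = String(Math.floor(p.returnPct / width) * width);
    buckets[key] = (buckets[key] ?? 0) + 1;
  }
  return buckets;
}

// Replace just the stats section of the day's file; everything else is kept as-is.
function refreshStats(fileJson: string, plays: DailyPlay[]): string {
  const file = JSON.parse(fileJson) as { day: string; stats?: unknown; [key: string]: unknown };
  const todays = plays.filter((p) => p.day === file.day);
  file.stats = { players: todays.length, returnBuckets: bucketReturns(todays) };
  return JSON.stringify(file);
}
```

Run on a schedule through the day, this is what would let the New York player and the London player see the same up-to-date chart.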

And that …. THAT is “all I have left to do”.

A considerable amount of work.

Which is why this first feedback phase is so important - can I gauge that the core gameplay is enticing enough for a daily game?

What’s funny with demographics is that I only know a couple of people who play, for example, NYT’s Connections.

And yet I think it gets about a million players each day?

That’s a big player-base to “do something” with, especially just for me!

Soon I’ll be writing up a case study of Minute Cryptic, which is very much what I’m aiming for with hold!

But for now… two weeks are over.

It’s done.

😮‍💨 ☺️